Hello friends, old and new! If you like what you read, please share the newsletter with your friends. Just click the button below. This week, I talk about a company that has been in the news: NVIDIA. -Senthil

Christopher Plummer, who played Baron von Trapp at age 34 in the film The Sound of Music (1965), hated it for almost all his life and is reported to have said, “It was so awful and sentimental and gooey.” Later, in old age, he attended a children’s Easter party and had a change of heart. As he noted in his memoir, “The more I watched, the more I realized what a terrific movie it is,” adding that it was “[…] warm, touching, absolutely timeless.”[1]

Commencements are a bit like that. The warm, touching, and philosophic chiseling of our imperfections. It is hard not to embrace how it captures the fleeting happiness of our fragile existence.

One of the best things about having an academic job is that I get to share in the happiness of graduations every year. I absorb the sights and sounds of a special event that happens fewer than a handful of times in our lives, if at all. In June, hope beckons: high schools, colleges, and middle schools celebrate, and families look forward to the future. It took me a long while to understand why graduations are called commencements.

With the advent of the internet, we can now enjoy commencement addresses from all over the world. This year, again, I listened to some exceptional speeches. I would be remiss if I didn’t mention my colleague Tinglong Dai’s remarkable speech (transcript in the link) at the doctoral graduation at Carnegie Mellon University. It touches on his life as a middle child in China.

June is a good month to talk about Asia, a focus of this blog. So I start the NVIDIA discussion with a commencement speech by Jen-Hsun “Jensen” Huang, the founder and CEO of NVIDIA. Jensen Huang emigrated to the US with his family at age 9 and grew up mostly in Oregon. He earned an undergraduate degree in electrical engineering at Oregon State University (we love engineers in this newsletter!) and a master’s degree at Stanford.

Mr. Huang usually rocks a suave leather jacket, but he traded it for a robe to give the commencement speech at National Taiwan University (NTU). I want to focus on that speech as a way of explaining NVIDIA to myself as a platform. As is an engineer’s wont, I also want to examine its role in supply chains.

Here is the speech (YouTube, 20 min, in English). YouTube often takes down unofficial links, and I haven’t found the official NTU link. Do help me if you come across it!

Commencement speeches often come as triptychs. The three messages of “failure and retreat” in Jensen Huang’s talk reminded me very much of Steve Jobs’s Stanford commencement address and its three stories: connecting the dots; love and loss; and finally, death.

1. Admit Mistakes and Ask for Help

In this address, Huang talks about building a gaming console for Sega. NVIDIA was nearly bankrupt and was forced to give up the contract with Sega. “Confronting our mistake and, with humility, asking for help saved NVIDIA,” he said. Since the pandemic, one of the things I have been trying to do is acknowledge and admit my failures more readily.

2. Staying Patient

The second decision was to put CUDA[2], NVIDIA’s brainchild platform (short for “Compute Unified Device Architecture”), into all of the company’s GPUs. This let the GPUs crunch data in addition to rendering 3D graphics. These data-crunching capabilities were often seen as overkill, and shareholders put “immense pressure” on the company for not focusing on profitability. Eventually, machine learning techniques advanced far enough that CUDA-powered GPUs were finally in real demand.

3. Being Open to Unknown Possibilities

The third ostensible mistake was aggressively pursuing the promising mobile-phone market just as graphics-rich capabilities were coming within reach. In his 2009 commencement address (7 mins) at Oregon State University, Huang describes NVIDIA as a company that sits at the boundary between technology and art. He seems to suggest betting heavily on graphics and mobile. However, the mobile market commoditized quickly. Luckily, the applications for computing have since diversified.

Understanding NVIDIA from Three Angles

To understand the enormous success of NVIDIA in recent years — and the explosive growth in market capitalization with the AI buzz, it may be useful to look at what they do.

In the triptych style, I am going to try to explain NVIDIA in three ways. (All errors remain mine; in demystifying tech, there are always approximations. So write to me if you would like me to elaborate on a concept or refine something.)

1. NVIDIA as a Platform for AI applications

I think it is useful to first consider a product that has been an unqualified success for NVIDIA: CUDA.

What is CUDA? I am going to try and write in the simplest way I understand it.

CUDA is often thought of as a programming language, or an extension of one, and it is. It is also considered a library, and it is that too: developers can use it from programs written in C/C++, Python, and even Fortran! (At the risk of dating myself, Fortran was the first language I learned, when I first saw a computer in the mid-nineties.)

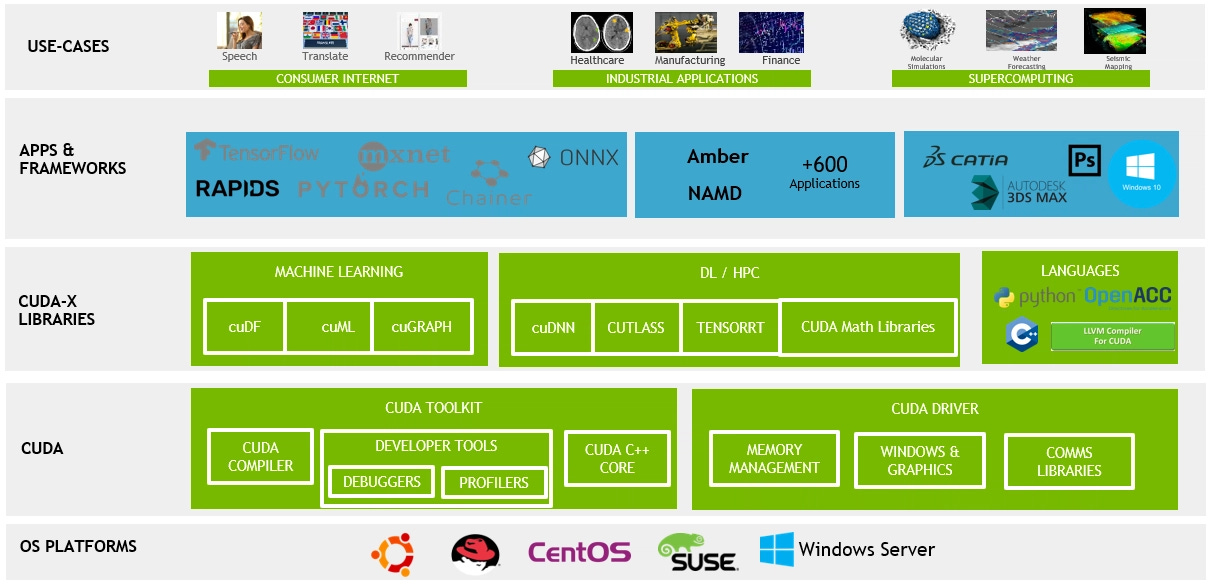

It is most helpful to think of CUDA as a platform that sits between applications and NVIDIA’s hardware, so that apps can run specific use cases on the GPU. This is incredibly powerful! Programmers, and even amateur programmers, can tap into the graphics card using the programming languages they already know, and so access the parallel computing capabilities of NVIDIA’s chips.
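The following rough sketch makes the idea of tapping into the GPU’s parallelism a little more concrete. It is written in Python, runs entirely on the CPU, and shows how a CUDA program splits work. In real CUDA, a kernel is launched over a grid of thread blocks. Each thread works out which element it owns from its block and thread indices (`blockIdx.x * blockDim.x + threadIdx.x`). The function names `vector_add_kernel`, `launch`, and `gpu_style_add` are hypothetical. The loops only simulate what a GPU would run concurrently; this is not CUDA’s actual API.

```python
def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """One 'thread' of a hypothetical kernel: it handles exactly one element."""
    # Same index formula a CUDA thread uses: blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    if i < len(out):  # the last block may contain spare threads with no work
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Emulate a 1-D kernel launch by looping over every block and thread.

    A GPU would run these (block, thread) pairs concurrently; here they run
    one after another on the CPU.
    """
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def gpu_style_add(a, b, block_dim=4):
    """Add two equal-length lists element-wise, CUDA style."""
    n = len(a)
    out = [0.0] * n
    grid_dim = (n + block_dim - 1) // block_dim  # enough blocks to cover n elements
    launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
    return out


if __name__ == "__main__":
    a = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
    b = [10.0, 20.0, 30.0, 40.0, 50.0, 60.0]
    print(gpu_style_add(a, b))  # [11.0, 22.0, 33.0, 44.0, 55.0, 66.0]
```

On an actual GPU, the two nested loops in `launch` disappear. Thousands of threads execute the same kernel body at once, which is where the speedups come from.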

Building this platform entirely in-house has been advantageous for NVIDIA, because the continued engagement of programmers offers a way to improve the capabilities of its chips. This has helped NVIDIA advance both the CUDA toolkit and its Graphics Processing Units (GPUs). For example, NVIDIA GPUs have cut deep learning training times (say, from 15 hours to 5 hours on a Tesla, NVIDIA’s line of data-center GPUs at the time). Likewise, cuBLAS, an accelerated version of BLAS (the Basic Linear Algebra Subprograms, which originated as a Fortran library), speeds up the training of deep learning algorithms. (Yes, it is all linear algebra, all the way down.)
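Since it is all linear algebra, a small example may help. A workhorse BLAS routine is GEMM (general matrix multiply), which computes C ← αAB + βC. A fully connected neural-network layer is essentially one such call. The sketch below is plain Python with naive loops, and the function names `gemm` and `dense_layer` are hypothetical. cuBLAS computes the same kind of result, but with heavily optimized kernels spread across the GPU’s many cores.

```python
def gemm(alpha, A, B, beta, C):
    """Return alpha * (A @ B) + beta * C, with matrices as lists of rows.

    This mirrors what the BLAS routine GEMM computes; it does not call BLAS.
    """
    m, k = len(A), len(A[0])
    if len(B) != k:
        raise ValueError("inner dimensions of A and B must match")
    n = len(B[0])
    # Start from beta * C, then accumulate the scaled product into it.
    result = [[beta * C[i][j] for j in range(n)] for i in range(m)]
    for i in range(m):
        for j in range(n):
            dot = 0.0
            for p in range(k):  # dot product of row i of A and column j of B
                dot += A[i][p] * B[p][j]
            result[i][j] += alpha * dot
    return result


def dense_layer(x_batch, weights, bias):
    """A fully connected layer, Y = X W + b, written as a single GEMM call.

    Each row of x_batch is one input example; the bias is copied to every row
    so it can play the role of C with beta = 1.
    """
    bias_rows = [list(bias) for _ in x_batch]
    return gemm(1.0, x_batch, weights, 1.0, bias_rows)


if __name__ == "__main__":
    X = [[1.0, 2.0], [3.0, 4.0]]  # two examples, two features each
    W = [[1.0, 0.0], [0.0, 1.0]]  # identity weights, so Y = X + b
    b = [0.5, -0.5]
    print(dense_layer(X, W, b))  # [[1.5, 1.5], [3.5, 3.5]]
```

Training a deep network repeats calls like this millions of times on much larger matrices. That is why an accelerated GEMM matters so much.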

In my field, Operations, there has been steady growth in deep learning algorithms for a variety of applications, including design, assortment planning, and inventory management. (Think of them as the use cases in the picture above.)

2. NVIDIA in Hardware: Chip Supply Chains

In 1999, NVIDIA introduced the GeForce 256, the first graphics card to be called a GPU. At the time, the principal reason for having a GPU was gaming. It wasn’t until later that people used GPUs for math, science, and engineering.

As Huang’s commencement talk suggests, CUDA, together with NVIDIA’s GPUs, has been a long bet. Chips alone, without software like CUDA, are hard to harness for high-performance computing. And CUDA alone can’t be a platform without high-quality hardware. NVIDIA’s dominance in AI still depends on the supply chain.

CUDA’s advantages have arrived at the perfect time, because (a) demand for high-performance computing is exploding, and (b) we are running up against the physical limits of chipmaking.

To illustrate (a): ChatGPT uses NVIDIA’s A100 GPU, which is built on the Ampere GA100 chip. Without a semiconductor deep-dive, I’ll just note that NVIDIA argues, with good reason, that its new H100 can improve performance 8 to 9 times.

To illustrate (b): single-threaded CPU performance, which for decades rode the gains predicted by Moore’s law, has been improving more slowly in recent years. We are hitting manufacturing constraints at the frontier of physics. Chip yields are harder to improve, and heat limits how high clock rates can go. This is also why thermal throttling, where a chip lowers its clock speed to stay within temperature limits, comes up so often in discussions of GPUs.

In any case, it is worth pointing out that NVIDIA’s GPU dominance is propelled by its chip manufacturer, TSMC, the company we looked at in #10: TSMC Story. Working with suppliers on the other side of the platform, NVIDIA unveiled cuLitho, a software library designed to accelerate computational lithography workloads and thus speed up chip manufacturing.

Every day, prophets are screaming on Facebook walls and Twitter tenement halls that AI poses an existential risk and that AI research should be shut down. But the real existential risk to AI development is its dependence on fragile supply chains. Cross-strait tensions between China and Taiwan, and the singular dependence on TSMC’s manufacturing and ASML’s machines, are bigger existential threats to AGI ever being born.

3. NVIDIA in Crypto Land

Finally, the crypto bubble was a big boost for NVIDIA. Demand for NVIDIA chips skyrocketed as miners used them to solve proof-of-work puzzles.
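A toy version of proof-of-work shows why GPUs suited mining. The sketch below is a simplified, hashcash-style puzzle, not any real cryptocurrency’s block format or hash scheme. It keeps trying nonces until the SHA-256 hash of the data plus the nonce starts with a required number of zero hex digits. Each guess is independent of every other guess, so the search splits naturally across many GPU threads. The function names `proof_of_work` and `verify` and their parameters are hypothetical.

```python
import hashlib


def proof_of_work(block_data: str, difficulty: int, max_nonce: int = 10_000_000):
    """Search for a nonce whose SHA-256 digest of block_data + nonce begins with
    `difficulty` hexadecimal zeros. Returns (nonce, hex_digest), or None if no
    nonce below max_nonce works.
    """
    target_prefix = "0" * difficulty
    for nonce in range(max_nonce):
        # Every candidate nonce can be checked independently of the others,
        # which is what makes this search easy to parallelize on a GPU.
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(target_prefix):
            return nonce, digest
    return None


def verify(block_data: str, nonce: int, difficulty: int) -> bool:
    """Checking a claimed solution takes a single hash, however long the search took."""
    digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
    return digest.startswith("0" * difficulty)


if __name__ == "__main__":
    result = proof_of_work("block #1: Alice pays Bob 5", difficulty=4)
    if result is not None:
        nonce, digest = result
        print(nonce, digest)
        print(verify("block #1: Alice pays Bob 5", nonce, difficulty=4))
```

Each extra zero digit multiplies the expected number of guesses by 16. That is why miners raced for hardware that can try as many nonces per second as possible.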

I don’t have much to say about crypto in this essay (or maybe for a while), but NVIDIA and TSMC are the only companies I know of that are fundamental to both AI and crypto.

Wrapping up…

We are indeed living in interesting times. LLMs, AI, and deep learning have tremendously raised awareness of programming and increased the scale of computation in the world. Yet, amazingly, all of it depends on physical hardware, and manufacturing supply chains have never been more important.

I want to leave you with the words of the economist Brad DeLong:

[…] using a significantly improved process of turning sand into something that can come damned close to thinking with parts four nanometers on a side in the most complicated human manufacturing and division of labor process ever accomplished.

So complicated that in fact, there are only two productive organizations, each of which is absolutely essential for it, one of which is the one in the Netherlands that makes the extreme ultraviolet machines, and the other one is the one in Taiwan that knows how to actually use the extreme ultraviolet machines to carve paths for electrons to flow in stone made of sand.

As Arthur C. Clarke said, “Any sufficiently advanced technology is indistinguishable from magic.”

It is an amazing world really!

[1] https://www.today.com/popculture/christopher-plummer-had-thorny-history-sound-music-t208239

[2] https://blogs.nvidia.com/blog/2012/09/10/what-is-cuda-2/
